Working with Data Files

Structured files that are intended for loading into a DX Graph Collection are referred to as Data Files. Data Files are uploaded into Buckets where they can be validated for correctness and loaded into a Collection. DX Graph provides multiple types of Jobs for working with data files.

All Data File jobs perform validation. If validation errors are found in a Data File, a Validation Errors file is created with the following naming convention: {originalFilename}.{YYYYMMDD_HHmmss}.errors.jsonl, for example products.csv.20230926_160054.errors.jsonl.

The Validation Errors file will be generated alongside the original Data File in the Bucket.
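As a small illustration of the naming convention, the sketch below builds a Validation Errors filename from an original filename and a timestamp. The function name `validation_errors_filename` is hypothetical and not part of DX Graph; only the filename pattern comes from the convention described above.

```python
from datetime import datetime


def validation_errors_filename(original_filename: str, generated_at: datetime) -> str:
    """Build a name of the form {originalFilename}.{YYYYMMDD_HHmmss}.errors.jsonl."""
    # strftime codes: %Y%m%d gives YYYYMMDD, %H%M%S gives HHmmss (24-hour clock).
    timestamp = generated_at.strftime("%Y%m%d_%H%M%S")
    return f"{original_filename}.{timestamp}.errors.jsonl"


example_name = validation_errors_filename("products.csv", datetime(2023, 9, 26, 16, 0, 54))
print(example_name)
```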

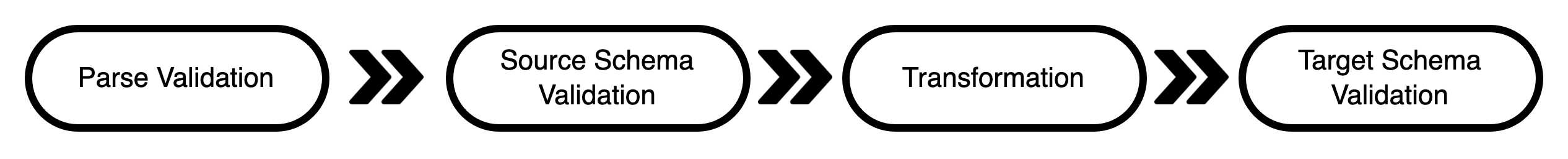

Validation consists of the following steps:

- Parse Validation: The Data File is parsed according to the specified Parse Options. If the Data File cannot be parsed, the Data File is considered invalid.

- Source Schema Validation: The parsed Data File is validated against the specified Source Schema. If the Data File does not conform to the Source Schema, the Data File is considered invalid. (Optional.)

- Transformation: While this step is not strictly a validation step, it is performed on the Data File as part of validation. The Data File is transformed according to the specified Transformers, which is a list of transforms that are applied to each record in the Data File. Each transformation may, optionally, have a schema associated with it. If a schema is specified, the transformed record is validated against the schema. If the transformed record does not conform to the schema, the Data File is considered invalid. (Optional.)

- Target Schema Validation: The transformed Data File is validated against the specified Target Schema. A Target Schema can be manually specified or, typically, taken from a Collection's schema. If the Data File does not conform to the Target Schema, the Data File is considered invalid. (Optional.)
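The order of the steps above can be sketched for a single, already parsed record as follows. This is an illustration of the sequence only, not DX Graph's implementation: every name is hypothetical, and a "schema" is reduced to a list of required field names as a stand-in for a real JSON Schema check.

```python
from typing import Any, Callable, Optional

Record = dict[str, Any]
# Assumption: a schema is only a list of required field names (stand-in for JSON Schema).
Schema = list[str]
# A transformer is a function plus an optional schema for its output.
Transformer = tuple[Callable[[Record], Record], Optional[Schema]]


def missing_fields(record: Record, schema: Optional[Schema]) -> list[str]:
    """Return required fields absent from the record (empty when no schema is given)."""
    return [name for name in (schema or []) if name not in record]


def validate_record(
    record: Record,
    source_schema: Optional[Schema] = None,
    transformers: tuple[Transformer, ...] = (),
    target_schema: Optional[Schema] = None,
) -> tuple[Optional[Record], Optional[str]]:
    """Run the optional steps in order; return (record, None) or (None, reason)."""
    missing = missing_fields(record, source_schema)
    if missing:
        return None, f"source schema: missing {missing}"
    for index, (transform, step_schema) in enumerate(transformers):
        try:
            record = transform(dict(record))  # work on a copy of the record
        except Exception as exc:
            return None, f"transformation {index}: {exc}"
        missing = missing_fields(record, step_schema)
        if missing:
            return None, f"transformation {index} schema: missing {missing}"
    missing = missing_fields(record, target_schema)
    if missing:
        return None, f"target schema: missing {missing}"
    return record, None


add_category: Transformer = (lambda r: {**r, "category": "electronics"}, None)

ok, error = validate_record(
    {"product_id": "p1", "price": 9.5},
    source_schema=["product_id"],
    transformers=(add_category,),
    target_schema=["product_id", "category"],
)
print(ok, error)

bad, reason = validate_record({"name": "no id"}, source_schema=["product_id"])
print(bad, reason)
```

The sketch stops at the first failing step, which mirrors the rule that a failure at any step makes the Data File invalid.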

Job Types

The various job types related to working with files are documented here.

Analyze Data File Endpoint

The Analyze Data File endpoint allows you to specify many of the parameters described in this document and see the results immediately, which helps when configuring validation and import jobs. The API returns the following information:

| Field | Description |
|---|---|
| nbrIssues | The total number of issues found in the entire source file. |
| nbrFinalRecords | The total number of valid records in the entire source file. |
| issues | The first 10 records that have issues. |
| validRecords | The first 10 records that are valid. |

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_analyze
content-type: application/json
Authorization: Bearer {{apiKey}}
X-Customer-Code: {{customerCode}}

{
  "filename": "products.csv",
  "sourceSchema": {
    "type": "object",
    "properties": {
      "product_id": { "type": "string" },
      "name": { "type": "string" },
      "brand": { "type": "string" },
      "price": { "type": "number" }
    },
    "required": ["product_id"]
  },
  "recordIdentifierField": "product_id",
  "transformers": [
    {
      "type": "javascript",
      "config": {
        "expression": "_.set(data, 'category', 'electronics')"
      }
    }
  ],
  "targetSchema": {
    "type": "object",
    "properties": {
      "product_id": { "type": "string" },
      "name": { "type": "string" },
      "brand": { "type": "string" },
      "price": { "type": "number" },
      "category": { "type": "string" }
    },
    "required": ["product_id", "category"]
  },
  "parseOptions": {
    "format": "DELIMITED",
    "delimiter": ",",
    "quoteChar": "\"",
    "escapeChar": "\""
  },
  "collectionCode": "product"
}
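For readers calling the endpoint from a script, the following sketch builds the same kind of request with Python's standard library. The URL, headers and body fields are taken from the request above; the helper name `build_analyze_request` and the placeholder credentials are assumptions for illustration. The request is constructed but not sent.

```python
import json
import urllib.request

ANALYZE_URL = "https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_analyze"


def build_analyze_request(api_key: str, customer_code: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request for the Analyze Data File endpoint."""
    return urllib.request.Request(
        ANALYZE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
            "X-Customer-Code": customer_code,
        },
        method="POST",
    )


# A reduced body: only a subset of the fields shown in the full request.
body = {
    "filename": "products.csv",
    "recordIdentifierField": "product_id",
    "parseOptions": {"format": "DELIMITED", "delimiter": ",", "quoteChar": '"', "escapeChar": '"'},
    "collectionCode": "product",
}

# Placeholder credentials; replace with real values before sending.
request = build_analyze_request("YOUR_API_KEY", "YOUR_CUSTOMER_CODE", body)
print(request.get_method(), request.full_url)

# To send the request, one option is:
#   with urllib.request.urlopen(request) as response:
#       result = json.load(response)
# The result is expected to carry the fields listed in the table above (nbrIssues, issues, ...).
```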

Common Data File Job Type Parameters

Parse Options

The following are the available parse options:

| Parameter | Description |
|---|---|
| format | The format of the file. One of DELIMITED, EXCEL, JSONL. |
| delimiter | The delimiter used to separate fields. Applicable for DELIMITED. |
| quoteChar | The character used to quote fields. Applicable for DELIMITED. |
| escapeChar | The character used to escape quotes. Applicable for DELIMITED. |
| sheetName | The name of the sheet to import. Only applicable for EXCEL. |
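To make the roles of delimiter, quoteChar and escapeChar concrete, the sketch below parses a small delimited sample with Python's `csv` module. It illustrates the concepts only and makes no claim about how DX Graph's own parser behaves; the sample data is made up. When escapeChar equals quoteChar, a quote inside a quoted field is written as two quote characters.

```python
import csv
import io

parse_options = {"format": "DELIMITED", "delimiter": ",", "quoteChar": '"', "escapeChar": '"'}

# Made-up sample: the name field contains both a delimiter and a doubled (escaped) quote.
sample = 'product_id,name,price\np1,"27"" Monitor, Black",199.99\n'

same_char = parse_options["escapeChar"] == parse_options["quoteChar"]
reader = csv.DictReader(
    io.StringIO(sample),
    delimiter=parse_options["delimiter"],
    quotechar=parse_options["quoteChar"],
    # Python models "escape a quote by doubling it" with doublequote=True;
    # a distinct escape character is passed as escapechar instead.
    doublequote=same_char,
    escapechar=None if same_char else parse_options["escapeChar"],
)
rows = list(reader)
print(rows[0]["name"])
```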

Filename Patterns

Filename patterns are used to group files together for processing.

Matching Features

- Wildcards (*, *.js)

- Negation (!a*.js, *!(b).js)

- Extglobs (+(x|y), !(a|b))

- Brace expansion (foo/{1..5}.md, bar/{a,b,c}.js)

- Regex character classes (foo-[1-5].js)

- Regex logical "or" (foo_(abc|xyz).js)

| Example Pattern | Matches |
|---|---|
| *.js | All files with the extension .js |
| (x\|y) | All files with the name x or y |
| !(a\|b) | All files except a or b |
| foo_{1..5}.md | All files with the name foo_1.md, foo_2.md, foo_3.md, foo_4.md, foo_5.md |
| bar_{a,b,c}.js | All files with the name bar_a.js, bar_b.js, bar_c.js |
| foo-[1-5].js | All files with the name foo-1.js, foo-2.js, foo-3.js, foo-4.js, foo-5.js |
| foo_(abc\|xyz).js | All files with the name foo_abc.js or foo_xyz.js |
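The two simplest pattern kinds, wildcards and character classes, can be tried locally with Python's `fnmatch` module, as sketched below. This is only an approximation for experimenting: `fnmatch` does not support negation, extglobs, brace expansion or the logical "or" form, and it is an assumption that its wildcard and character-class semantics match those used by DX Graph. The file list is made up.

```python
from fnmatch import fnmatchcase

# Made-up bucket listing.
filenames = ["app.js", "foo-3.js", "foo-9.js", "readme.md"]


def matching(pattern: str) -> list[str]:
    """Return the filenames that match the pattern (case-sensitive)."""
    return [name for name in filenames if fnmatchcase(name, pattern)]


print(matching("*.js"))
print(matching("foo-[1-5].js"))
```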

Transformers

Transformers are used to transform records in a Data File. Transformers are specified as a list of transforms that are applied to each record in the Data File. Transformations may include setting additional data or metadata, concatenating or splitting fields, converting data types, renaming properties, or picking specific fields to import.

Each transformation may, optionally, have a schema associated with it. If a schema is specified, the transformed record is validated against the schema. If the transformed record does not conform to the schema, the Data File is considered invalid.

The following are the available transformers:

JavaScript

The JavaScript transformer allows you to specify a JavaScript expression that is applied to each record in the Data File. The expression is evaluated using the following variables:

- data: The record being transformed.

- dayjs: The dayjs library.

- _: The lodash library.

{
  "type": "javascript",
  "config": {
    "expression": "_.set(data, 'category', 'electronics')"
  }
}

The following are the available configuration options:

| Parameter | Description |
|---|---|
| expression | The JavaScript expression to evaluate. |

Each expression must return a JSON object. The returned object is the transformed record.

JSONata

The JSONata transformer allows you to specify a JSONata expression that is applied to each record in the Data File. The expression is evaluated using the following variables:

data: The record being transformed.

{
  "type": "jsonata",
  "config": {
    "expression": "$extend(data, { category: 'electronics' })"
  }
}

The following are the available configuration options:

| Parameter | Description |
|---|---|
| expression | The JSONata expression to evaluate. |

Each expression must return a JSON object. The returned object is the transformed record.

Record Identifier Field

The recordIdentifierField indicates which field uniquely identifies the records in the Data Files. The data in the field that you select to be the unique identifier for your Collection must conform to the following character specifications:

- String

- 250 character limit

- Alpha (lowercase and uppercase)

- Numeric digit

- The following special characters: _-.@()+,=;$!*'%

- No whitespace
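The character rules above can be checked before upload with a regular expression, as in the sketch below. The function name `is_valid_record_identifier` is hypothetical. Two points are assumptions rather than documented rules: that "Alpha" means ASCII letters only, and that an empty string is not a valid identifier.

```python
import re

# Letters, digits and the listed special characters; 1 to 250 characters, no whitespace.
# Assumptions: ASCII letters only, and at least one character is required.
IDENTIFIER_PATTERN = re.compile(r"[A-Za-z0-9_\-.@()+,=;$!*'%]{1,250}")


def is_valid_record_identifier(value: object) -> bool:
    """Return True when the value is a string that satisfies the character rules."""
    return isinstance(value, str) and IDENTIFIER_PATTERN.fullmatch(value) is not None


for candidate in ["SKU-123_a.b@x", "has space", "p/1", 42]:
    print(repr(candidate), is_valid_record_identifier(candidate))
```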

Error Files

Error files are generated when a Data File is validated, imported or transformed. Error files are created in both JSONL (one JSON object per line, one object per error) and CSV formats. The CSV files are provided for convenience and are not intended for further processing. The error file is named after the original Data File with the following suffix: {{sourceFilename}}-YYYYMMDD_HHmmss.errors.jsonl or .csv, where YYYYMMDD_HHmmss is the timestamp at which the error file was generated.

The fields of an error file are as follows:

| Field | Description |
|---|---|
| errorType | String. One of: INVALID_HEADER_PARSE (expected headers were not found), INVALID_ROW_PARSE (could not parse a line of data), INVALID_ROW_SCHEMA (parsed record did not conform to the specified schema), TRANSFORMATION_ERROR (error occurred during transformation). |
| rowNbr | Number. If available, the row number of the file that caused the error. |
| recordAsString | String. If available, the line that caused the error. |
| parsedFields | The list of fields that were parsed. |
| errorMessage | String. Description of what the error is. |
| recordIdentifier | The unique id of the parsed record. |
| recordAsJson | Object. If available, the parsed line that caused the error. |
| transformationIndex | Number. The index of the transformation that caused the error. |
| preTransformedObject | Object. The record before transformation. |
| postTransformedObject | Object. The record after transformation. |
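Because the JSONL error file holds one JSON object per line, it is straightforward to summarize with a short script. The sketch below counts errors by errorType. The sample lines are made up for illustration and use only field names from the table above; the function name `count_errors_by_type` is hypothetical.

```python
import json
from collections import Counter

# Made-up sample content standing in for a downloaded .errors.jsonl file.
sample_errors_jsonl = "\n".join(
    json.dumps(entry)
    for entry in [
        {"errorType": "INVALID_ROW_PARSE", "rowNbr": 4, "errorMessage": "unterminated quote"},
        {"errorType": "INVALID_ROW_SCHEMA", "rowNbr": 7, "recordIdentifier": "p7",
         "errorMessage": "price must be number"},
        {"errorType": "INVALID_ROW_SCHEMA", "rowNbr": 9, "recordIdentifier": "p9",
         "errorMessage": "name must be string"},
    ]
)


def count_errors_by_type(jsonl_text: str) -> Counter:
    """Count error records per errorType, skipping blank lines."""
    counts: Counter = Counter()
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        counts[json.loads(line)["errorType"]] += 1
    return counts


counts = count_errors_by_type(sample_errors_jsonl)
print(dict(counts))
```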