Loading Data into Collections

In this tutorial, section, we will load data into the Collections that we created in Creating Collections.

For a more detailed explanation of the data loading process, see Working with Data Files.

Creating Buckets

We will create the following buckets:

- Incoming

- Processed

- Invalidated

Using the API

The following API calls will create the buckets:

PUT https://io.conscia.ai/vue/_api/v1/buckets/incoming

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"dataBucketEntry": {

"name": "Incoming"

}

}

PUT https://io.conscia.ai/vue/_api/v1/buckets/processed

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"dataBucketEntry": {

"name": "Processed"

}

}

PUT https://io.conscia.ai/vue/_api/v1/buckets/invalidated

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"dataBucketEntry": {

"name": "Invalidated"

}

}

Uploading Data

We will upload the movies and actors CSV files to the incoming bucket.

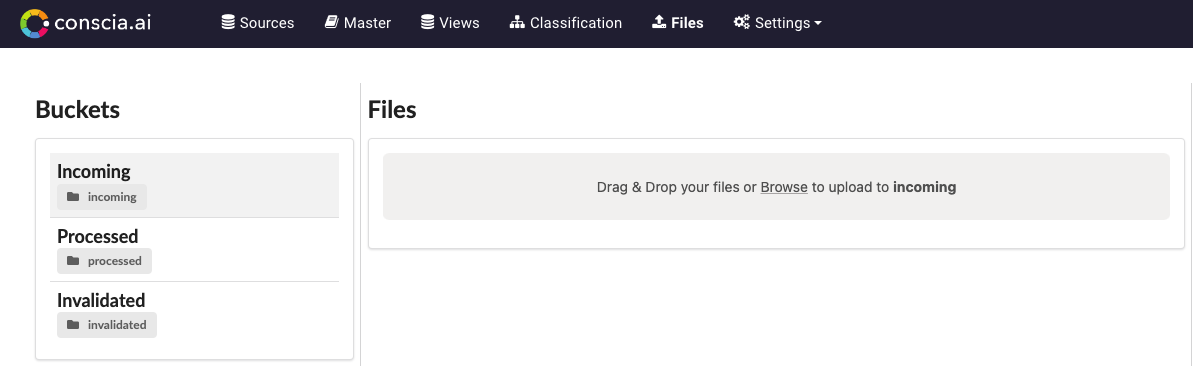

Using the UI

Navigate to the Files page and, in the left-pane, select the Incoming bucket (that was created above). To upload the movies.csv and actors.csv files, either drag and drop from them from your local machine or click Browse to locate and select them.

Using the API

curl --request POST \

--url https://io.conscia.ai/vue/_api/v1/buckets/incoming/upload \

--header 'authorization: Bearer {{apiKey}}' \

--header 'X-Customer-Code: {{customerCode}}' \

--form 'file[]=@movies.csv' \

--form 'file[]=@actors.csv'

The response be:

[

{

"filename": "movies.csv",

"message": "Successfully uploaded."

},

{

"filename": "actors.csv",

"message": "Successfully uploaded."

}

]

Importing Data Files

When importing a file you specify:

- How to parse the file (referred to as Parse Options)

- The expected schema of the file to validate against (i.e. how the data should be structured)

- How to transform the data from the file into the expected schema (if required)

The Parse Options for both the movies.csv and actors.csv files:

{

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

Import Movies

The following table lists the fields of the movie collections and how the data will be parsed and/or transformed from the movies.csv file. It is based on the movie collection created in the Creating Collections tutorial.

| Target Field | Source Field(s) | Required Transformation |

|---|---|---|

| movie_id | movie_id | This is a required field. |

| title | title | This is a required field. |

| year | year | Ensure that the value is a number. |

| runtime | runtime | Remove \N values. Ensure that the value is a number. |

| genres | genres | Transform comma-delimited list of genres into an array. |

| actor_ids | actor_ids | Transform comma-delimited list of genres into an array. |

Source Schema

The Source Schema is a JSON schema that describes the data in the CSV file. It does not have to match the schema of the collection. In fact, it is common for the Source Schema to be different from the schema of the collection. Every row in a delimited file is converted into a JSON object. The Source Schema describes the structure of that JSON object.

Consider the following line of the movies CSV file:

tt10969190,Stick Monster Movie,2020,\N,Fantasy,"nm8762035,nm4027115"

The JSON representation of this line is:

{

"movie_id": "tt10969190",

"title": "Stick Monster Movie",

"year": "2020",

"runtime": "\\N",

"genres": "Fantasy",

"actor_ids": "nm8762035,nm4027115"

}

The Source Schema for the movies.csv file is:

{

"type": "object",

"properties": {

"movie_id": { "type": "string" },

"title": { "type": "string" },

"year": { "type": "number" },

"runtime": { "type": "string" },

"genres": { "type": "string" },

"actor_ids": { "type": "string" }

},

"required": ["movie_id", "title"]

}

We can make year a number since we know that every value will be a numeric value. The JSON schema check will coerce the value to a number. If it is unable to coerce the value to a number, it will fail the validation. Otherwise, this field will not need to be transformed to a number since it was already coerced to a number.

We specified runtime to be a string even though we want to convert it to a number. We could made this a number if we knew that the value would always be a number. However, we know that this field contains the value \N which is not a number.

Transformers

See this for more details on transformers.

The transformers specification for the movies file is:

[

{ "type": "javascript", "config": { "expression": "_.set(data, 'runtime', data.runtime==='\\\\N' ? undefined : _.toNumber(data.runtime) )" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'genres', _.split(data.genres, ','))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'actor_ids', _.split(data.actor_ids, ','))" } }

]

Remember, the data variable in the expression represents the JSON object that represents the current row in the CSV file. The data variable is a JavaScript object. The _.set function is a lodash function that allows you to set a value in a JavaScript object. The first argument is the object that you want to set the value on. The second argument is the path to the value that you want to set. The third argument is the value that you want to set. The _.split function is a lodash function that allows you to split a string into an array. The first argument is the string that you want to split. The second argument is the delimiter that you want to use to split the string.

\N in the CSV file is turned into { "runtime": "\\N" } in JSON format since the backslash is escaped. Above, we check set runtime to undefined (which removes runtime from the JSON object) if it has a value of \\N. We must, again, escape the backslash since it is a special character in JavaScript.

Analyze the configurations

We will use the Data File Analyze endpoint to analyze the configurations used to load the movies.csv file.

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_analyze

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"filename": "movies.csv",

"sourceSchema": {

"type": "object",

"properties": {

"movie_id": { "type": "string" },

"title": { "type": "string" },

"year": { "type": "number" },

"runtime": { "type": "string" },

"genres": { "type": "string" },

"actor_ids": { "type": "string" }

},

"required": ["movie_id", "title"]

},

"recordIdentifierField": "movie_id",

"transformers": [

{ "type": "javascript", "config": { "expression": "_.set(data, 'runtime', data.runtime==='\\N' ? undefined : _.toNumber(data.runtime) )" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'genres', _.split(data.genres, ','))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'actor_ids', _.split(data.actor_ids, ','))" } }

],

"parseOptions": {

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

}

The result of the above call to the analyze endpoint will look like this:

{

"nbrIssues": 0,

"nbrFinalRecords": 11573,

"issues": [],

"validRecords": [

{

"recordIdentifier": "tt10396038",

"recordAsJson": {

"movie_id": "tt10396038",

"title": "Neither Hero Nor Traitor",

"year": 2020,

"runtime": 73,

"genres": [

"Drama",

"History"

],

"actor_ids": [

"nm6286339",

"nm0261957",

"nm8358700",

"nm7506705"

]

}

},

{

"recordIdentifier": "tt10397306",

"recordAsJson": {

"movie_id": "tt10397306",

"title": "Madison",

"year": 2020,

"runtime": 87,

"genres": [

"Adventure",

"Drama",

"Family"

],

"actor_ids": [

"nm10055076",

"nm0525518",

"nm0913217",

"nm12691322"

]

}

},

{

"recordIdentifier": "tt10397752",

"recordAsJson": {

"movie_id": "tt10397752",

"title": "Disordered",

"year": 2020,

"runtime": 89,

"genres": [

"Animation",

"Documentary",

"Family"

],

"actor_ids": [

"nm11496979"

]

}

},

{

"recordIdentifier": "tt10414756",

"recordAsJson": {

"movie_id": "tt10414756",

"title": "Party Hard",

"year": 2020,

"runtime": 103,

"genres": [

"Comedy",

"Drama"

],

"actor_ids": [

"nm6073478",

"nm10731923",

"nm10731924",

"nm4581810"

]

}

},

{

"recordIdentifier": "tt10423160",

"recordAsJson": {

"movie_id": "tt10423160",

"title": "Kaiji: Final Game",

"year": 2020,

"runtime": 128,

"genres": [

"Adventure"

],

"actor_ids": [

"nm7139946",

"nm0297885",

"nm4445437",

"nm3810403",

"nm5018289",

"nm2968873",

"nm4341140",

"nm10421125"

]

}

},

{

"recordIdentifier": "tt10935362",

"recordAsJson": {

"movie_id": "tt10935362",

"title": "The Demon of Daingerfield",

"year": 2020,

"genres": [

"Horror"

],

"actor_ids": [

"nm4049779"

]

}

},

{

"recordIdentifier": "tt10936214",

"recordAsJson": {

"movie_id": "tt10936214",

"title": "Bring 'em Black",

"year": 2020,

"runtime": 120,

"genres": [

"Western"

],

"actor_ids": [

"nm1253986",

"nm9555778",

"nm8531011",

"nm5274937"

]

}

},

{

"recordIdentifier": "tt10937670",

"recordAsJson": {

"movie_id": "tt10937670",

"title": "Figli",

"year": 2020,

"runtime": 97,

"genres": [

"Comedy"

],

"actor_ids": [

"nm0348347",

"nm0181246",

"nm0557609",

"nm2862095"

]

}

},

{

"recordIdentifier": "tt10943622",

"recordAsJson": {

"movie_id": "tt10943622",

"title": "This Is Not Normal",

"year": 2020,

"genres": [

"Documentary"

],

"actor_ids": [

"nm1171860",

"nm1544202"

]

}

},

{

"recordIdentifier": "tt10944276",

"recordAsJson": {

"movie_id": "tt10944276",

"title": "Death of a Telemarketer",

"year": 2020,

"runtime": 88,

"genres": [

"Comedy",

"Drama",

"Thriller"

],

"actor_ids": [

"nm2031358",

"nm0355097",

"nm0005286",

"nm5131141"

]

}

}

]

}

As explained here, the validRecords field contains the first 10 valid records in the file.

Performing the Import

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_import

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"skippedBucketCode": "skipped",

"processedBucketCode": "processed",

"invalidBucketCode": "invalidated",

"filenamePattern": "movies.csv",

"skipInvalidRecords": false,

"sourceSchema": {

"type": "object",

"properties": {

"movie_id": { "type": "string" },

"title": { "type": "string" },

"year": { "type": "number" },

"runtime": { "type": "string" },

"genres": { "type": "string" },

"actor_ids": { "type": "string" }

},

"required": ["movie_id", "title"]

},

"recordIdentifierField": "movie_id",

"transformers": [

{ "type": "javascript", "config": { "expression": "_.set(data, 'runtime', data.runtime==='\\\\N' ? undefined : _.toNumber(data.runtime) )" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'genres', _.split(data.genres, ','))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'actor_ids', _.split(data.actor_ids, ','))" } }

],

"collectionCode": "movie",

"parseOptions": {

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

}

The result should look like this:

{

"filenamesToProcess": [

"movies.csv"

],

"filenamesToSkip": [],

"processedFiles": [

{

"filename": "movies.csv",

"nbrValidRecords": 11573,

"nbrValidationIsses": 0,

"nbrInserts": 11573,

"nbrUpdates": 0,

"message": "Successfully loaded file: movies.csv into movie. "

}

],

"hasInvalidRecords": false

}

Using the UI, navigate to the Processed bucket, you should see the movies.csv file. The movies.csv file will no longer be in the Incoming bucket. If there were any invalid records, they would be in the Invalidated bucket.

Upload the movies.csv file to the incoming bucket, again.

curl --request POST \

--url https://io.conscia.ai/vue/_api/v1/buckets/incoming/upload \

--header 'authorization: Bearer {{apiKey}}' \

--header 'X-Customer-Code: {{customerCode}}' \

--form 'file[]=@movies.csv'

Now lets re-import with a configuration with known issues. We will convert runtime to a number even though we know that some values are \N. We have to set skipInvalidRecords to true since we know that there are invalid records and we still want to import the valid records.

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_import

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"skippedBucketCode": "skipped",

"processedBucketCode": "processed",

"invalidBucketCode": "invalidated",

"filenamePattern": "movies.csv",

"skipInvalidRecords": true,

"sourceSchema": {

"type": "object",

"properties": {

"movie_id": { "type": "string" },

"title": { "type": "string" },

"year": { "type": "number" },

"runtime": { "type": "string" },

"genres": { "type": "string" },

"actor_ids": { "type": "string" }

},

"required": ["movie_id", "title"]

},

"recordIdentifierField": "movie_id",

"transformers": [

{ "type": "javascript", "config": { "expression": "_.set(data, 'runtime', _.toNumber(data.runtime) )" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'genres', _.split(data.genres, ','))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'actor_ids', _.split(data.actor_ids, ','))" } }

],

"collectionCode": "movie",

"parseOptions": {

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

}

The result will be:

{

"filenamesToProcess": [

"movies.csv"

],

"filenamesToSkip": [],

"processedFiles": [

{

"filename": "movies.csv",

"nbrValidRecords": 8619,

"nbrValidationIsses": 2954,

"nbrInserts": 0,

"nbrUpdates": 8619,

"message": "Successfully loaded file: movies.csv into movie. There were some invalid records."

}

],

"hasInvalidRecords": true

}

The existing movie records were updated with the 8,619 valid records. The 2,954 errors are detailed in the files: movies.csv-YYYMMMDD-HHmmss.errors.csv and movies.csv-YYYMMMDD-HHmmss.errors.jsonl which were copied to the processed bucket alongside the original movies.csv file. These two files are described here.

Query the movie collection

POST https://io.conscia.ai/vue/_api/v1/collections/movie/records/_query

Content-Type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{}

Importing Actors

The following table lists the fields of the actor collections and how the data will be parsed and/or transformed from the actors.csv file. It is based on the actor collection create in the Creating Collections tutorial.

| Target Field | Source Field(s) | Required Transformation |

|---|---|---|

| actor_id | actor_id | This is a required field. |

| name | name | This is a required field. |

| birth_year | birthYear | Remove \N values. Ensure that the value is a number. Rename field. |

| death_year | deathYear | Remove \N values. Ensure that the value is a number. Rename field. |

| professions | primaryProfession | Remove \N values. Transform comma-delimited list of professions into an array. Rename field. |

Source Schema

The Source Schema for the actors.csv file is:

{

"type": "object",

"properties": {

"actor_id": { "type": "string" },

"name": { "type": "string" },

"birthYear": { "type": "string" },

"deathYear": { "type": "string" },

"primaryProfession": { "type": "string" }

},

"required": ["actor_id", "name"]

}

Transformers

The transformers specification for the actors file is:

[

{ "type": "javascript", "config": { "expression": "_.set(data, 'birth_year', data.birthYear==='\\\\N' ? undefined : _.toNumber(data.birthYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'death_year', data.deathYear==='\\\\N' ? undefined : _.toNumber(data.deathYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'professions', _.split(data.primaryProfession, ','))" } },

{ "type": "javascript", "config": { "expression": "_.omit(data, ['birthYear', 'deathYear', 'primaryProfession'])" } }

]

Analyze the configurations

We will use the Data File Analyze endpoint to analyze the configurations used to load the actors.csv file.

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_analyze

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"filename": "actors.csv",

"sourceSchema": {

"type": "object",

"properties": {

"actor_id": { "type": "string" },

"name": { "type": "string" },

"birthYear": { "type": "string" },

"deathYear": { "type": "string" },

"primaryProfession": { "type": "string" }

},

"required": ["actor_id", "name"]

},

"recordIdentifierField": "actor_id",

"transformers": [

{ "type": "javascript", "config": { "expression": "_.set(data, 'birth_year', data.birthYear==='\\\\N' ? undefined : _.toNumber(data.birthYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'death_year', data.deathYear==='\\\\N' ? undefined : _.toNumber(data.deathYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'professions', _.split(data.primaryProfession, ','))" } },

{ "type": "javascript", "config": { "expression": "_.omit(data, ['birthYear', 'deathYear', 'primaryProfession'])" } }

],

"collectionCode": "actor",

"parseOptions": {

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

}

The result of the above call to the analyze endpoint will look like this:

{

"nbrIssues": 0,

"nbrFinalRecords": 40151,

"issues": [],

"validRecords": [

{

"actor_id": "nm0130335",

"name": "Manuel Callau",

"birth_year": 1946,

"professions": [

"actor"

]

},

{

"actor_id": "nm0130429",

"name": "Luis Callejo",

"birth_year": 1970,

"professions": [

"actor",

"producer"

]

},

{

"actor_id": "nm0130435",

"name": "María Fernanda Callejón",

"professions": [

"actress"

]

},

{

"actor_id": "nm0130438",

"name": "Christopher Callen",

"professions": [

"actress",

"soundtrack"

]

},

{

"actor_id": "nm0130502",

"name": "Dayton Callie",

"birth_year": 1946,

"professions": [

"actor",

"writer",

"producer"

]

},

{

"actor_id": "nm0130536",

"name": "James Callis",

"birth_year": 1971,

"professions": [

"actor",

"writer",

"producer"

]

},

{

"actor_id": "nm0130736",

"name": "Daniel Caltagirone",

"birth_year": 1972,

"professions": [

"actor"

]

},

{

"actor_id": "nm0130860",

"name": "Mhairi Calvey",

"birth_year": 1988,

"professions": [

"actress",

"producer",

"writer"

]

},

{

"actor_id": "nm0131060",

"name": "Alejandro Camacho",

"birth_year": 1954,

"professions": [

"actor",

"producer",

"director"

]

},

{

"actor_id": "nm0131246",

"name": "Felipe Camargo",

"birth_year": 1960,

"professions": [

"actor",

"assistant_director"

]

}

]

}

Performing the Import

POST https://io.conscia.ai/vue/_api/v1/buckets/incoming/files/_import

content-type: application/json

Authorization: Bearer {{apiKey}}

X-Customer-Code: {{customerCode}}

{

"skippedBucketCode": "skipped",

"processedBucketCode": "processed",

"invalidBucketCode": "invalidated",

"filenamePattern": "actors.csv",

"sourceSchema": {

"type": "object",

"properties": {

"actor_id": { "type": "string" },

"name": { "type": "string" },

"birthYear": { "type": "string" },

"deathYear": { "type": "string" },

"primaryProfession": { "type": "string" }

},

"required": ["actor_id", "name"]

},

"recordIdentifierField": "actor_id",

"transformers": [

{ "type": "javascript", "config": { "expression": "_.set(data, 'birth_year', data.birthYear==='\\\\N' ? undefined : _.toNumber(data.birthYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'death_year', data.deathYear==='\\\\N' ? undefined : _.toNumber(data.deathYear))" } },

{ "type": "javascript", "config": { "expression": "_.set(data, 'professions', _.split(data.primaryProfession, ','))" } },

{ "type": "javascript", "config": { "expression": "_.omit(data, ['birthYear', 'deathYear', 'primaryProfession'])" } }

],

"collectionCode": "actor",

"parseOptions": {

"format": "DELIMITED",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\""

}

}

The result should look like this:

{

"filenamesToProcess": [

"actors.csv"

],

"filenamesToSkip": [],

"processedFiles": [

{

"filename": "actors.csv",

"nbrValidRecords": 40151,

"nbrValidationIsses": 0,

"nbrInserts": 40151,

"nbrUpdates": 0,

"message": "Successfully loaded file: actors.csv into actor. "

}

],

"hasInvalidRecords": false

}